Branch detection with HDBSCAN* has now been incorporated in scikit-learn.

In data science, exploratory data analysis (EDA) is essential for discovering patterns within datasets. A common goal is to identify subpopulations, often represented as clusters. While traditional clustering algorithms like k-means often assume Gaussian-shaped clusters, real-world data frequently deviates from this assumption. Instead, clusters can have complex shapes, in which case density-based methods like HDBSCAN* work better.

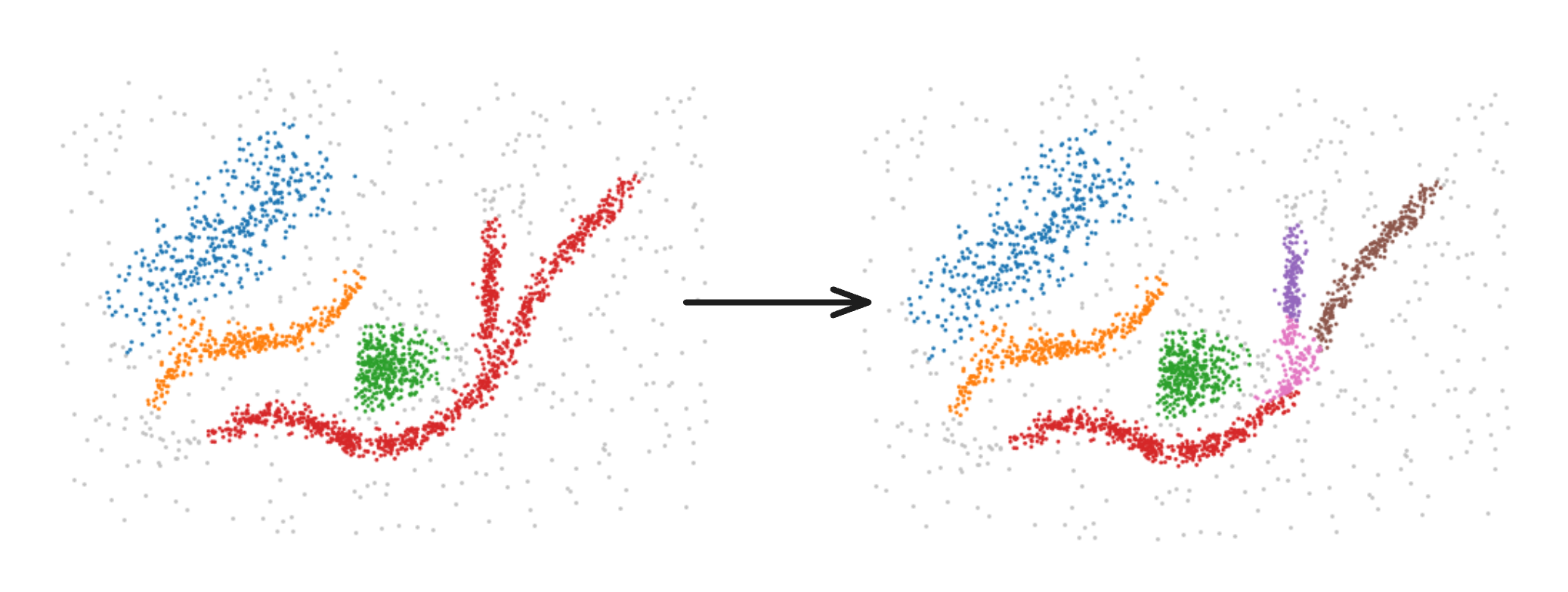

Although it is able to identify “stretched” clusters, HDBSCAN* cannot detect branching structures (aka flares), which can represent meaningful subpopulations.

In our paper “FLASC: A Flare-Sensitive Clustering Algorithm”, we introduce a novel approach that enhances the density-based clustering algorithm HDBSCAN* to detect branches within clusters. This post explores the key concepts, methodology, and applications of FLASC, highlighting its significance in exploratory data analysis.

Traditional clustering algorithms struggle with detecting flares because they rely on gaps between clusters for differentiation. FLASC addresses this limitation by introducing a post-processing step that detects flares within clusters, allowing for the identification of meaningful subpopulations that would otherwise be missed.

The FLASC Algorithm

FLASC extends HDBSCAN* by introducing a post-processing step to detect flares within clusters. The core idea is to analyse the connectivity within clusters and identify branches based on a measure of eccentricity, which describes how far each point is from the cluster’s centroid.

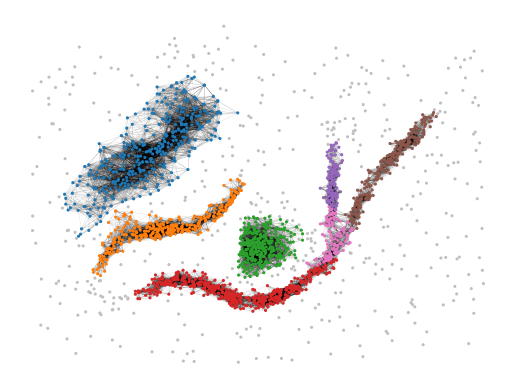

In FLASC, two types of approximation graphs are constructed for each cluster:

- Full Approximation Graph: Includes edges between all points in the cluster, weighted by their mutual reachability distance, capturing the cluster’s connectivity.

- Core Approximation Graph: More conservative, only including edges between points within a certain distance.

These graphs are used to construct an eccentricity contour tree, capturing the branching structure within the cluster. FLASC then identifies flares and classifies data points into different subgroups based on their branch membership.

This is a high-level overview of the FLASC algorithm:

See our paper for a full explanation.

Using FLASC

From the scikit-learn documentation:

import branch_detector from hdbscan

idx = np.argmax([len(x) for x in branch_detector.branch_persistences_])

branch_detector.cluster_condensed_trees_[idx].plot(

select_clusters=True, selection_palette=["C3", "C4", "C5"]

)

plt.ylabel("Eccentricity")

plt.title(f"Branches in cluster {idx}")

plt.show()

This should give you the following figure:

FLASC represents a significant improvement on clustering algorithms, addressing a critical gap in detecting complex structures like flares within clusters. As datasets continue to grow in complexity, branch detection and tools like FLASC will become increasingly important for uncovering subtle, hidden patterns.